Introduction

Definition of Ethical AI

Ethical artificial intelligence (AI) refers to the application of moral principles and ethical standards in the development, implementation and use of AI technologies. The main goal is to ensure that AI systems are designed and used in a way that respects human rights, promotes fairness, and minimizes bias and discrimination.

In other words, ethical AI means ensuring that AI technologies benefit society as a whole without causing harm or exacerbating existing inequalities.

Importance of ethics in AI development

The development of AI raises complex ethical questions due to the power and ubiquity of these technologies. AI systems can make decisions that have significant impacts on the lives of individuals, whether in the areas of health, employment, justice, or security.

The main reasons for the importance of ethics in AI are:

- Respect for human rights: avoiding discrimination and injustice

- Promoting fairness: ensuring algorithms do not replicate human biases

- Privacy protection: ensuring the confidentiality of user data

- Transparency: making algorithmic decisions understandable and explainable

- Accountability: ensuring that AI developers and users are accountable for their actions

Presentation of Microsoft as a major player in the field of ethical AI

Microsoft is one of the leading companies in the field of artificial intelligence and has made significant commitments to promote ethics in the development of its AI technologies. Here are some of Microsoft's guiding principles for AI:

- Transparency

- Accountability

- Privacy

- Security

- Fairness

These principles are integrated into every phase of development and deployment of Microsoft AI solutions.

Microsoft has also developed tools and frameworks to help developers build ethical AI systems, such as:

- Fairlearn: to detect and mitigate bias

- InterpretML: to make AI models more explainable

Additionally, Microsoft collaborates with researchers, regulators, and industry partners to develop standards and best practices for ethical AI.

Microsoft's commitment to ethical AI is also reflected in its internal governance, with the formation of ethics committees and councils dedicated to monitoring and guiding company practices.

These efforts position Microsoft as a key player in promoting and adopting ethical standards in the artificial intelligence industry.

I - The challenges of ethical AI

Bias and discrimination

Algorithmic biases pose a major challenge to AI ethics. Algorithms can, intentionally or unintentionally, reproduce existing biases in training data, which can lead to unfair or discriminatory decisions.

Concrete examples of bias in AI systems

- Automated recruiting tools that favor certain demographic groups

- Discrimination in access to employment, credit, and other essential services

Social and economic consequences

- Reinforcement of social inequalities

- Discrimination in access to employment, credit, and other essential services

Transparency and explainability

Importance of transparency

- Allows users to understand how and why a decision was made

- Strengthens the accountability and acceptability of AI systems

Challenges related to explainability

- Complexity of deep learning models

- Difficulty in explaining the internal decision-making processes of algorithms

Impact on user trust

- Opaque or unexplained decisions increase distrust of AI systems

- Distrust raises the risk that AI technologies are rejected or not adopted

Privacy and data protection

Privacy is another critical challenge for ethical AI. AI systems often process massive amounts of personal data, raising concerns about confidentiality and data misuse.

Data privacy issues

- Collection and storage of sensitive data without adequate consent

- Use of personal data for purposes not initially intended
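The two issues above can be made concrete with a minimal sketch. It assumes a simple record layout and is not a description of any particular product: a keyed hash replaces a direct identifier, and a check refuses processing for any purpose the user did not consent to. The names `SECRET_KEY`, `pseudonymize`, `can_use`, and the purpose labels are hypothetical and exist only for illustration.

```python
import hashlib
import hmac

# Hypothetical secret key for the example; a real system would load it
# from a secrets manager rather than hard-coding it.
SECRET_KEY = b"example-key-do-not-use"

def pseudonymize(user_id: str) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256).

    Records stay linkable to each other, but the stored value no longer
    contains the identifier itself.
    """
    return hmac.new(SECRET_KEY, user_id.encode("utf-8"), hashlib.sha256).hexdigest()

def can_use(record: dict, purpose: str) -> bool:
    """Allow processing only for purposes the user consented to."""
    return purpose in record["consented_purposes"]

# Hypothetical record: the user agreed to one purpose only.
record = {
    "user": pseudonymize("alice@example.com"),
    "consented_purposes": {"service_delivery"},
}

print(can_use(record, "service_delivery"))  # True
print(can_use(record, "ad_targeting"))      # False
```

Keyed hashing is pseudonymization rather than anonymization: anyone holding the key can recompute the mapping, so the key needs the same protection as the original data.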

Examples of privacy violations involving AI

- Invasive monitoring applications

- Data leaks and cyberattacks targeting AI systems

Current regulations and legislation

- General Data Protection Regulation (GDPR) in Europe

- Data protection laws in various countries

Security and reliability

AI systems must be secure and reliable to avoid potentially catastrophic failures. AI security is about both protecting against external attacks and ensuring that systems function as intended.

Security risks of AI systems

- Vulnerability to cyberattacks aimed at manipulating results

- Malicious use of AI, such as deepfakes or automated attacks

Failure cases and their implications

- Self-driving cars involved in accidents due to detection errors

- Trading algorithms causing disruption in financial markets

Importance of reliability in critical applications

- Ensuring that systems operate in a predictable and stable manner

- Minimizing risks in sensitive areas such as health, finance, and public safety

These challenges underscore the importance of developing ethical frameworks and robust practices to ensure that AI technologies are deployed responsibly and beneficially for society as a whole.

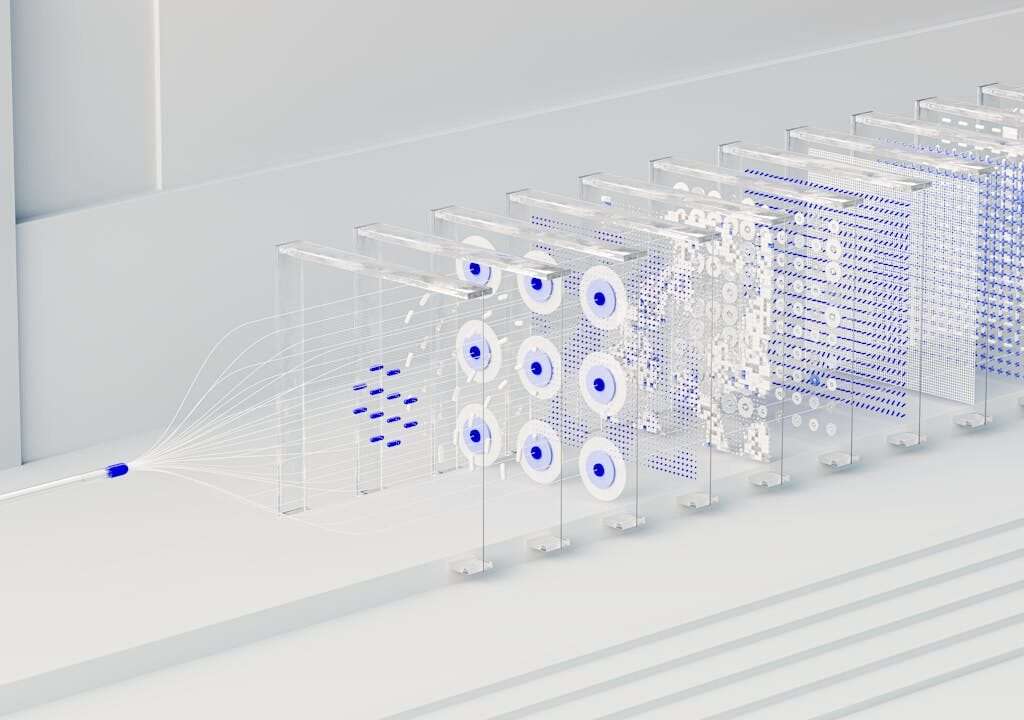

II - Solutions proposed by Microsoft for responsible development of AI

Ethical development frameworks and tools

Fairlearn

Fairlearn is an open-source toolkit, originally developed at Microsoft, for assessing and mitigating bias in AI models. It helps developers measure fairness by comparing model behavior across groups with dedicated metrics, and it offers mitigation techniques to reduce the disparities it finds.

For example, Fairlearn has been used in automated recruitment projects to ensure fair evaluation of candidates and avoid discrimination based on gender or ethnicity.
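To illustrate the kind of measurement involved, here is a minimal sketch in plain Python of one common group fairness metric, the demographic parity difference: the gap between the highest and lowest rate of positive decisions across groups. Fairlearn exposes comparable metrics in its `fairlearn.metrics` module, but the code below does not use the library; the function names mirror the concept only, and the data is invented for the example.

```python
from collections import defaultdict

def selection_rates(predictions, groups):
    """Return the share of positive predictions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}

def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest group selection rates (0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())

# Hypothetical screening outcomes: 1 = shortlisted, 0 = rejected.
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = selection_rates(predictions, groups)
gap = demographic_parity_difference(predictions, groups)
print(rates)  # {'A': 0.75, 'B': 0.25}
print(gap)    # 0.5
```

A gap of 0.5 means group A is shortlisted three times as often as group B in this toy data. Whether such a gap is acceptable depends on the context, and a single metric is only a starting point for review, not a verdict.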

InterpretML

InterpretML is designed to make AI models more explainable. This tool offers explainability techniques that help understand how models make decisions. It is particularly useful in critical industries like healthcare, where professionals need to understand the recommendations of AI systems.

For example, InterpretML has been used to explain the decisions of medical diagnostic algorithms, allowing doctors to validate and understand treatment suggestions.
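One family of explainable models is additive: the prediction is a sum of per-feature contributions, so each contribution can be shown to the user directly. InterpretML's Explainable Boosting Machine belongs to this family. The sketch below does not use InterpretML; it is a hand-written additive model whose intercept, weights, and feature names are invented purely for illustration and carry no medical meaning.

```python
import math

# Hypothetical intercept and weights for an illustrative additive risk model.
# These numbers are invented for the example, not taken from any real system.
INTERCEPT = -4.0
WEIGHTS = {"age": 0.03, "blood_pressure": 0.02, "smoker": 0.8}

def explain(features):
    """Return the predicted probability and each feature's additive contribution."""
    contributions = {name: WEIGHTS[name] * value for name, value in features.items()}
    score = INTERCEPT + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-score))  # logistic link
    return probability, contributions

probability, contributions = explain({"age": 60, "blood_pressure": 100, "smoker": 1})

# List features from most to least influential for this single prediction.
ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
for name, value in ranked:
    print(f"{name}: {value:+.2f}")
print(f"predicted probability: {probability:.2f}")
```

Because the score is a plain sum, a reviewer can see which inputs pushed the prediction up or down and by how much, which is the property that makes this class of model easier to validate than an opaque one.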

Internal policies and governance

AI, Ethics, and Effects in Engineering and Research (AETHER) Committee

The AETHER Committee at Microsoft oversees AI development practices to ensure they comply with ethical standards. This committee evaluates ongoing projects and provides guidance to integrate ethics, transparency, and accountability into all aspects of AI development.

Regulation and compliance

Microsoft adheres to strict data protection and privacy regulations, such as the GDPR in Europe. The company also implements internal policies to ensure that development practices comply with international laws and standards, thereby strengthening trust and security around its AI technologies.

Collaboration and partnerships

Academic partnerships

Microsoft collaborates with leading academic institutions, such as the University of Cambridge, to advance research into the societal impacts of AI. These partnerships aim to develop innovative techniques while considering the ethical and social implications of AI technologies.

Initiatives with NGOs

The company also partners with non-governmental organizations like the Partnership on AI and the Data & Society Research Institute to promote the responsible use of AI. These collaborations help share knowledge, develop best practices, and create global standards for AI ethics.

Training and awareness

Educational programs

Microsoft offers free online courses through Microsoft Learn on ethical AI principles. These programs cover topics such as transparency, fairness, and accountability, and are designed to equip developers with the skills needed to integrate ethical practices into their projects.

Workshops and seminars

The company regularly organizes workshops and seminars to raise awareness among stakeholders about best practices in ethical AI. These events bring together experts from various fields to discuss challenges and solutions in AI development, facilitating an exchange of knowledge and perspectives.

These initiatives demonstrate Microsoft's commitment to developing AI technologies that are not only innovative but also responsible and beneficial to society.

III - Initiatives and commitments for the future

Research and development initiatives

Microsoft is investing heavily in research and development to strengthen the ethics and responsibility of AI. A key initiative is the AI for Earth program, which uses AI to solve environmental problems. The program supports projects that conserve water, protect biodiversity, and sustainably manage agricultural resources.

For example, AI has been used to improve weather forecasts and provide accurate agricultural recommendations, helping farmers reduce losses and increase yields.

Another crucial aspect is Microsoft’s FATE (Fairness, Accountability, Transparency, and Ethics in AI) lab, which focuses on studying the societal implications of AI. The lab works on projects to ensure that AI systems are fair, transparent, and ethical. FATE collaborates with academic institutions and civil society organizations to develop innovative techniques while taking into account social justice issues.

Awareness and education programs

Courses and certifications

Microsoft offers free online courses on ethical AI principles through its Microsoft Learn platform. These courses cover topics such as transparency, fairness, and accountability, and are designed to equip developers with the skills needed to integrate ethical practices into their projects. They also raise awareness among a broader audience and help ensure that developers have the knowledge needed to build responsible AI systems.

Commitments to diversity and inclusion

AI for Accessibility

Microsoft has launched specific programs like AI for Accessibility, aimed at developing AI solutions that improve the lives of people with disabilities. This program funds projects that use AI to create assistive technologies, improving accessibility and digital inclusion for all.

For example, applications using AI to transcribe and translate speech in real time for people with hearing loss have been developed through this program.

Inclusive Design

Microsoft also promotes the concept of Inclusive Design, which involves designing technology products and services that are accessible to everyone from the start. This approach ensures that the needs of users with diverse abilities are considered in the design process, making technologies more usable for a broader population.

For example, Microsoft has developed features in its products like Windows and Office to make them more accessible to people with visual or motor disabilities.

IV - Conclusion

Ethical AI is a crucial issue for contemporary technological development. Faced with the challenges posed by algorithmic bias, transparency, data protection, and security, it is essential to implement rigorous and responsible practices. Microsoft is firmly committed to this path by developing tools and frameworks such as Fairlearn and InterpretML, which help measure and mitigate bias while making algorithmic decisions more understandable.

Microsoft’s internal policies and governance, such as the AETHER Committee, provide ongoing oversight of development practices to ensure they comply with ethical standards. By collaborating with academic institutions and NGOs, Microsoft strives to promote global ethical practices and share knowledge essential for responsible AI development.

Training and awareness programs, as well as diversity and inclusion initiatives, demonstrate Microsoft’s commitment to a holistic approach to AI ethics.

By investing in these areas, the company aims to create AI technologies that are not only innovative but also equitable and beneficial for all of society.

The future of AI depends on our ability to integrate strong ethical principles into every step of its development. Microsoft, through its ongoing efforts and clear commitments, demonstrates that innovation can go hand in hand with responsibility and ethics. For developers and businesses, adopting these practices is not only a moral obligation but also a necessity to build a more just and equitable world through technology.